A Foundation for Success: Modernized Tools Help Expand Tuberculosis Studies

Agency-wide investments are helping CDC handle more medical record data than ever before

The third phase of the Tuberculosis Epidemiologic Studies Consortium (TBESC-III) is exploring ways to improve testing and treatment for latent tuberculosis (TB) infection. People with latent TB infection do not feel sick and cannot spread TB bacteria to others. However, without treatment, people with latent TB infection may develop TB disease.

The consortium seeks to improve both screening for TB infection and treatment outcomes. Specifically, it wants to know:

- How is latent TB infection screening, testing, and treatment offered to patients in primary care settings?

- What is the impact of primary care interventions that are designed to increase the number of eligible patients who are tested for TB infection and who complete treatment for latent TB infection?

To answer these questions, the consortium built on its earlier work. The first two phases of TBESC involved close collaboration among CDC, academic institutions, and health departments. For this third phase, the consortium formed new partnerships with four major primary care sites in Denver; Seattle and King County, Washington; and Northern California.

Organizations participating in TBESC-III include:

- Denver Health and Hospital Authority

- Kaiser Foundation Research Institute

- Public Health – Seattle & King County

- The Regents of the University of California, San Francisco

- RTI International

This was an exciting step, but it also meant the team would need to receive and analyze data from electronic medical record (EMR) systems, which posed several challenges.

The challenges

Routinely collecting EMR data from all four sites meant the team would have to build a pipeline capable of processing a huge amount of data: more than 500,000 patients and 12 million records at baseline.

Additionally, in past phases of TBESC, data collection, quality assurance and quality control (QA/QC), data cleaning, and analytics each required substantial staff time, with many manual steps and one-off custom code. That approach would not scale to the much larger incoming datasets.

The team also needed speed. Because EMR data are constantly changing, the team had to be able to respond quickly to the participating sites. For example, if a patient goes to the doctor today, their medical record tomorrow will differ from their record yesterday. The faster the team can run the QA/QC steps, the faster they can re-pull data if needed, and the less each patient’s data in the EMR will have changed in the meantime.

The solution

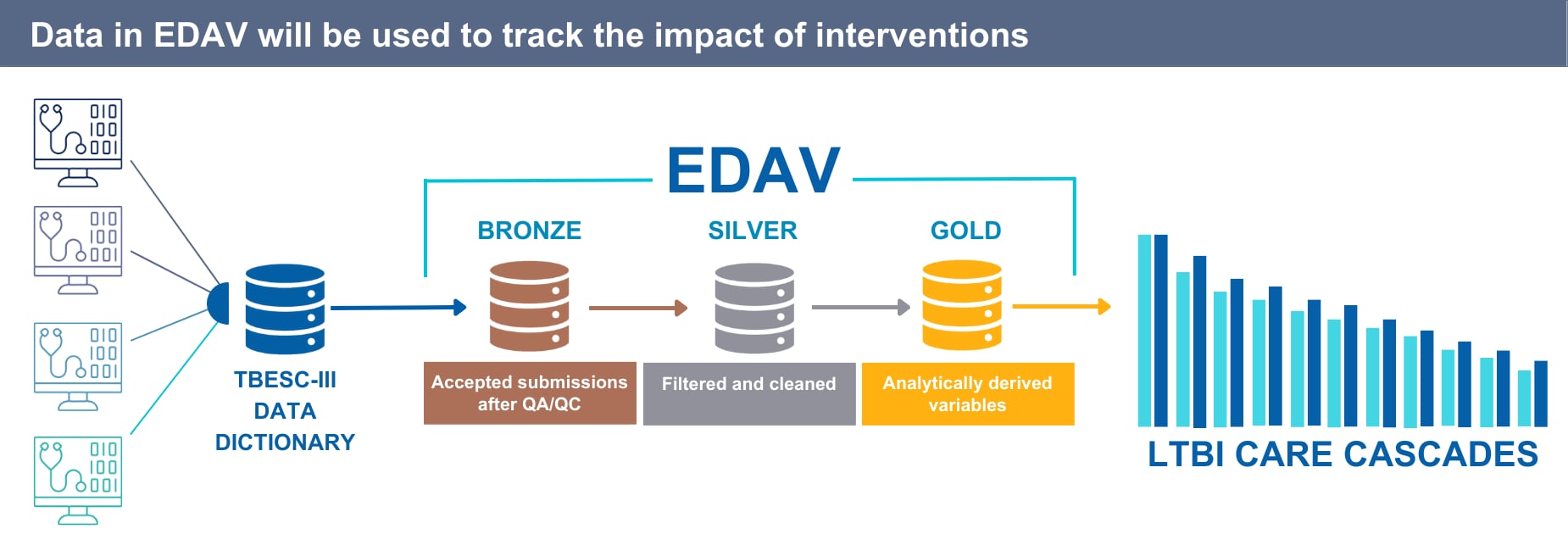

After exploring the options and consulting with CDC’s informatics office, the team selected the agency’s new Enterprise Data Analytics and Visualization (EDAV) platform for their data pipeline. The EDAV platform was recently built as part of CDC’s Data Modernization Initiative. It’s a cloud-based set of technology, tools, and resources used to simplify data workflows and save time throughout the data process, from data collection to visualization and action.

EMR data from the four sites feed into the TBESC-III data dictionary and then move through EDAV in three levels: Bronze (submissions accepted after QA/QC), Silver (filtered and cleaned), and Gold (analytically derived variables). The Gold variables are then used to generate latent tuberculosis infection (LTBI) care cascades.
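The Bronze, Silver, and Gold levels follow a tiered layout often called a "medallion" architecture, where each level refines the one before it. The minimal Python sketch below illustrates that flow on a few records. It is not the TBESC-III or EDAV code: the field names, the QA/QC checks, the 5% rejection threshold, and the derived variables are all hypothetical stand-ins chosen for illustration.

```python
from typing import Optional

# Hypothetical record shape: one dict per EMR row. Field names are illustrative
# only; the real TBESC-III data dictionary (200+ variables) is not reproduced here.
Record = dict[str, Optional[str]]

REQUIRED_FIELDS = ("patient_id", "visit_date")
ALLOWED_RESULTS = {"positive", "negative", "indeterminate"}


def qa_issues(rec: Record) -> list[str]:
    """Return QA/QC issues for one record (a tiny stand-in for ~200 real checks)."""
    issues = [f"missing {field}" for field in REQUIRED_FIELDS if not rec.get(field)]
    result = rec.get("tb_test_result")
    if result is not None and result.strip().lower() not in ALLOWED_RESULTS:
        issues.append(f"unexpected tb_test_result: {result!r}")
    return issues


def to_bronze(submission: list[Record], max_error_rate: float = 0.05) -> Optional[list[Record]]:
    """Bronze: accept a whole submission only if few of its records fail QA/QC."""
    if not submission:
        return None
    flagged = sum(1 for rec in submission if qa_issues(rec))
    if flagged / len(submission) > max_error_rate:
        return None  # rejected; the site would be asked to re-pull its data
    return submission


def normalize(rec: Record) -> Record:
    """Fix minor issues automatically: trim whitespace, lowercase the test result."""
    out = {k: (v.strip() if isinstance(v, str) else v) for k, v in rec.items()}
    if out.get("tb_test_result") is not None:
        out["tb_test_result"] = out["tb_test_result"].lower()
    return out


def to_silver(bronze: list[Record]) -> list[Record]:
    """Silver: drop records that still fail QA/QC, clean the rest, remove exact duplicates."""
    seen: set = set()
    silver = []
    for rec in bronze:
        if qa_issues(rec):
            continue
        clean = normalize(rec)
        key = tuple(sorted(clean.items()))
        if key not in seen:
            seen.add(key)
            silver.append(clean)
    return silver


def to_gold(silver: list[Record]) -> dict[str, dict[str, bool]]:
    """Gold: derive patient-level analytic variables from cleaned records."""
    gold: dict[str, dict[str, bool]] = {}
    for rec in silver:
        flags = gold.setdefault(rec["patient_id"], {"tested": False, "test_positive": False})
        result = rec.get("tb_test_result")
        if result is not None:
            flags["tested"] = True
        if result == "positive":
            flags["test_positive"] = True
    return gold


submission = [
    {"patient_id": "A1", "visit_date": "2023-01-05", "tb_test_result": "Positive "},
    {"patient_id": "A1", "visit_date": "2023-01-05", "tb_test_result": "positive"},
    {"patient_id": "B2", "visit_date": "2023-02-10", "tb_test_result": None},
]
bronze = to_bronze(submission)
silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
# {'A1': {'tested': True, 'test_positive': True}, 'B2': {'tested': False, 'test_positive': False}}
```

In this sketch the two "A1" rows differ only in formatting, so the Silver step's automatic cleanup makes them identical and keeps one. Keeping each level as a separate function means a rejected submission never reaches the cleaned or analytic data.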

EDAV offered the capabilities to process the massive amount of EMR data quickly and accurately. Once the team’s proposal was reviewed and accepted by CDC’s IT and Data Governance Board, they began building the data pipeline.

Today, the completed pipeline provides technical capabilities that make the work better and faster. For example:

- A “data dictionary” that contains more than 200 variables, including metadata, patient data, visits, diagnostics, imaging, prescribing, dispensing, and diagnostic codes

- A real-time QA/QC process that identifies data issues: for the largest submission to date, nearly 13 million records, the pipeline ran about 200 checks and returned results within 1 hour

- A cleaning step that automatically addresses minor QA/QC issues

- Analytic code to generate a latent tuberculosis infection cascade of care

- Access to Power BI to visualize the results
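A cascade of care counts how many patients reach each successive step, from eligibility through treatment completion, and shows where patients drop out between steps. The minimal Python sketch below assumes hypothetical patient-level yes/no flags and illustrative stage names. It is not the consortium's analytic code, and the study's actual cascade definitions may differ.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical patient-level flags, e.g. derived from Gold-level variables.
@dataclass
class Patient:
    eligible: bool
    tested: bool
    test_positive: bool
    treatment_started: bool
    treatment_completed: bool


# Cascade stages in order (illustrative; not the study's official definitions).
STAGES = ("eligible", "tested", "test_positive", "treatment_started", "treatment_completed")


def care_cascade(patients: list[Patient]) -> list[tuple[str, int, Optional[float]]]:
    """Count patients reaching each stage after passing every earlier stage.

    Returns (stage, count, fraction retained from the previous stage) tuples.
    """
    rows: list[tuple[str, int, Optional[float]]] = []
    remaining = list(patients)
    prev_count: Optional[int] = None
    for stage in STAGES:
        # Filter cumulatively so each stage is a subset of the one before it.
        remaining = [p for p in remaining if getattr(p, stage)]
        count = len(remaining)
        if prev_count is None:
            fraction = None  # first stage has no previous stage to compare with
        else:
            fraction = count / prev_count if prev_count else 0.0
        rows.append((stage, count, fraction))
        prev_count = count
    return rows


patients = [
    Patient(True, True, True, True, True),
    Patient(True, True, True, True, False),
    Patient(True, True, False, False, False),
    Patient(True, False, False, False, False),
    Patient(False, True, True, True, True),  # not eligible, so excluded from every stage
]
for stage, count, fraction in care_cascade(patients):
    share = "" if fraction is None else f" ({fraction:.0%} of previous stage)"
    print(f"{stage}: {count}{share}")
```

Because the filter is cumulative, a patient is counted at a stage only if they also passed every earlier stage, so no count can exceed the one before it. With the sample data, the cascade runs 4, 3, 2, 2, 1, and the largest losses appear at testing and at treatment completion.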

The benefits

The team can now tap into modernized, agency-wide resources created through CDC’s Data Modernization Initiative to carry out this new phase of work.

Better data automation and data readiness allow CDC staff to respond more quickly, review data in real time, and ultimately report information back to sites. As a result, staff can more efficiently monitor whether interventions improve patient testing and treatment outcomes.