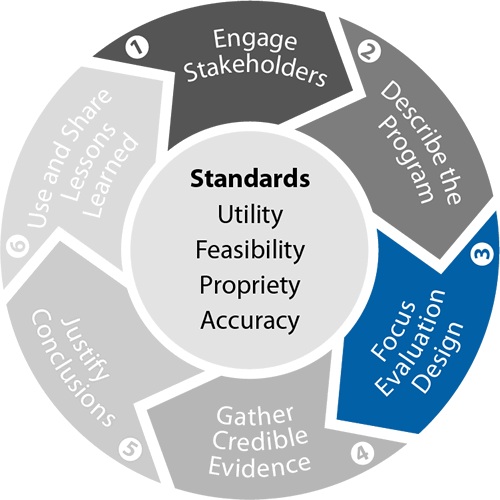

Program Evaluation Framework Checklist for Step 3

Focus the Evaluation


In Step 2 you described the entire program, but a given evaluation usually does not cover the entire program. Step 3 is a systematic approach to deciding where to focus this particular evaluation. Working with stakeholders, you decide where in the logic model to focus by applying some of the evaluation standards. Although there are more than 30 standards, the most important ones fall into the following four clusters:

- Utility: Who needs the information from this evaluation and how will they use it?

- Feasibility: How much money, time, skill, and effort can be devoted to this evaluation?

- Propriety: Who needs to be involved in the evaluation for it to be ethical?

- Accuracy: What design will lead to accurate information?

The standards help you assess and choose among options at every step of the framework, but some standards are more influential at some steps than at others. The two most important in setting the focus are “utility” and “feasibility.” Make sure all stakeholders share a common understanding of the phases of evaluation (formative/summative) and the types of evaluation (needs assessment/process/outcome/impact).

Using the logic model, think through where you want to focus your evaluation, applying the principles of the “utility” standard:

- Purpose(s) of the evaluation: implementation assessment, accountability, continuous program improvement, generating new knowledge, or some other purpose

- User(s): the individuals or organizations that will employ the evaluation findings

- Use(s): how users will employ the evaluation results, e.g., to make modifications as needed, monitor progress toward program goals, or make decisions about continuing or refunding the program

- Review and refine the purpose, user, and use with stakeholders, especially those who will use the evaluation findings

Identify the program components that should be part of the focus of the evaluation, based on the utility discussion:

- Specific activities that should be examined

- Specific outcomes that should be examined

- Specific pathways from activities to outcomes, or from outcomes to more distal outcomes

- Specific inputs or moderating factors that may or may not have played a role in the success or failure of the program

Refine or expand the focus to include additional areas of interest, if any, identified in Steps 1 and 2:

- Does the focus address key issues of interest to important stakeholders?

- Did the program description discussion identify issues in the program logic that may influence the evaluation focus?

- Are issues of cost, efficiency, and/or cost-effectiveness important to some or all stakeholders?

Refine or expand the focus to include additional areas of interest based on the propriety and accuracy evaluation standards:

- Are there program components (activities, outcomes, pathways, or inputs/moderators) that must be included for reasons of “ethics” or propriety?

- Are there program components (activities, outcomes, pathways, or inputs/moderators) that must be included to ensure that the resulting focus is “accurate”?

“Reality check” the expanded focus using the principles embedded in the “feasibility” evaluation standard:

- The program’s stage of development: Is the focus appropriate given how long the program has been in existence?

- Program intensity: Is the focus appropriate given the size and scope of the program, even at maturity?

- Resources: Has a realistic assessment of the necessary resources been done? If so, are enough resources devoted to the evaluation to address the highest-priority items in the evaluation focus?

At this point the focus may still be expressed in very general terms: this activity, this outcome, this pathway. Now convert these into more specific evaluation questions. Some examples of evaluation questions are:

- Was [specific] activity implemented as planned?

- Did [specific] outcomes occur, and at an acceptable level?

- Were the changes in [specific] outcomes due to the activities rather than to something else?

- What factors prevented the activities in the focus from being implemented as planned? Were [specific inputs and moderating factors] responsible?

- What factors prevented (more) progress on the outcomes in the focus? Were [specific moderating factors] responsible?

- What was the cost of implementing the activities?

- What was the cost-benefit or cost-effectiveness of the outcomes that were achieved?

Use the four evaluation standards, especially utility and feasibility, to decide on the most appropriate evaluation design. The three most common designs are:

- Experimental: Participants are randomly assigned to either the experimental or the control group. Only the experimental group receives the intervention. Measures of the outcomes of interest are usually taken in both groups before and after the intervention.

- Quasi-experimental: The same as an experimental design, except that participants are not randomly assigned; the group that does not receive the intervention is therefore called a “comparison” group rather than a control group.

- Non-experimental: Because the evaluator cannot manipulate the assignment of participants, there is no comparison or control group. Other routes, such as correlational analysis, surveys, or case studies, must therefore be used to draw conclusions.
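Random assignment is the step that separates an experimental design from a quasi-experimental one. As an illustration only, here is a minimal Python sketch of simple random assignment of participants to an experimental and a control group. The function name `randomly_assign`, the participant IDs, and the fixed seed are hypothetical choices for the example, not part of this checklist.

```python
import random


def randomly_assign(participants, seed=None):
    """Simple random assignment (hypothetical helper for illustration).

    Shuffles a copy of the participant list and splits it in half.
    With an odd number of participants, the control group gets one extra.
    """
    rng = random.Random(seed)  # a fixed seed makes the allocation reproducible
    shuffled = list(participants)  # copy so the caller's list is not modified
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"experimental": shuffled[:half], "control": shuffled[half:]}


if __name__ == "__main__":
    # Hypothetical participant IDs P01..P10
    ids = [f"P{i:02d}" for i in range(1, 11)]
    groups = randomly_assign(ids, seed=42)
    print("Experimental:", groups["experimental"])
    print("Control:", groups["control"])
```

Recording the seed lets others reproduce the allocation. This sketch performs simple random assignment only; it does not attempt to balance the groups on participant characteristics.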

Some factors to consider in selecting the most appropriate design include:

- With what level of rigor must decisions about “causal attribution” be made?

- How important is the ability to translate the program to other settings?

- How much money and skill are available to devote to implementing the evaluation?

- Are there naturally occurring control or comparison groups? If not, will selection of these be very costly and/or disruptive to the programs being studied?

Start drafting the evaluation plan. You will complete the plan in Step 4, but at this point begin to populate the measurement table (see example below) with:

- Program component from logic model (activity, outcome, pathway)

- Evaluation question(s) for each component

| Evaluation Questions | Indicators | Data Source(s) | Data Collection Methods |
|---|---|---|---|

Review and refine the evaluation focus and the starter elements of the evaluation plan with stakeholders, especially those who will use the evaluation results.


E-mail: cdceval@cdc.gov