Updated Guidelines for Evaluating Public Health Surveillance Systems

Recommendations from the Guidelines Working Group

Guidelines Working Group (chairman, administrative support, and members): Greg Armstrong, M.D.; Guthrie S. Birkhead, M.D., M.P.H.; John M. Horan, M.D., M.P.H.; Guillermo Herrera; Lisa M. Lee, Ph.D.; Robert L. Milstein, M.P.H.; Carol A. Pertowski, M.D.; Michael N. Waller

The following CDC staff members prepared this report: Robert R. German, M.P.H.; Lisa M. Lee, Ph.D.; John M. Horan, M.D., M.P.H.; Robert L. Milstein, M.P.H.; Carol A. Pertowski, M.D.; and Michael N. Waller, in collaboration with Guthrie S. Birkhead, M.D., M.P.H.

Additional CDC Contributors

Office of the Director: Karen E. Harris, M.P.H.; Joseph A. Reid, Ph.D.; Gladys H. Reynolds, Ph.D., M.S.; Dixie E. Snider, Jr., M.D., M.P.H.

Agency for Toxic Substances and Disease Registry: Wendy E. Kaye, Ph.D.; Robert Spengler, Sc.D.

Epidemiology Program Office: Vilma G. Carande-Kulis, Ph.D., M.S.; Andrew G. Dean, M.D., M.P.H.; Samuel L. Groseclose, D.V.M., M.P.H.; Robert A. Hahn, Ph.D., M.P.H.; Lori Hutwagner, M.S.; Denise Koo, M.D., M.P.H.; R. Gibson Parrish, M.D., M.P.H.; Catherine Schenck-Yglesias, M.H.S.; Daniel M. Sosin, M.D., M.P.H.; Donna F. Stroup, Ph.D., M.Sc.; Stephen B. Thacker, M.D., M.Sc.; G. David Williamson, Ph.D.

National Center for Birth Defects and Developmental Disabilities: Joseph Mulnaire, M.D., M.S.P.H.

National Center for Chronic Disease Prevention and Health Promotion: Terry F. Pechacek, Ph.D.; Nancy Stroup, Ph.D.

National Center for Environmental Health: Thomas H. Sinks, Ph.D.

National Center for Health Statistics: Jennifer H. Madans, Ph.D.

National Center for HIV, STD, and TB Prevention: James W. Buehler, M.D.; Meade Morgan, Ph.D.

National Center for Infectious Diseases: Janet K. Nicholson, Ph.D.; Jose G. Rigau-Perez, M.D., M.P.H.

National Center for Injury Prevention and Control: Richard L. Ehrenberg, M.D.

National Immunization Program: H. Gay Allen, M.S.P.H.; Roger H. Bernier, Ph.D.; Nancy Koughan, D.O., M.P.H., M.H.A.; Sandra W. Roush, M.T., M.P.H.

National Institute for Occupational Safety and Health: Rosemary Sokas, M.D., M.O.H.

Public Health Practice Program Office: William A. Yasnoff, M.D., Ph.D.

Consultants and Contributors

Scientific Workgroup on Health-Related Quality of Life Surveillance

Paul Etkind, Dr.P.H., Massachusetts Department of Public Health, Jamaica Plain, Massachusetts; Annie Fine, M.D., New York City Department of Health, New York City, New York; Julie A. Fletcher, D.V.M., M.P.H. candidate, Emory University, Atlanta, Georgia; Daniel J. Friedman, Ph.D., Massachusetts Department of Public Health, Boston, Massachusetts; Richard S. Hopkins, M.D., M.S.P.H., Florida Department of Health, Tallahassee, Florida; Steven C. MacDonald, Ph.D., M.P.H., Washington State Department of Health, Olympia, Washington; Elroy D. Mann, D.V.M., M.Sc., Health Canada, Ottawa, Canada; S. Potjaman, M.D., Government of Thailand, Bangkok, Thailand; Marcel E. Salive, M.D., M.P.H., National Institutes of Health, Bethesda, Maryland.

Summary

The purpose of evaluating public health surveillance systems is to ensure that problems of public health importance are being monitored efficiently and effectively. CDC's Guidelines for Evaluating Surveillance Systems are being updated to address the need for a) the integration of surveillance and health information systems, b) the establishment of data standards, c) the electronic exchange of health data, and d) changes in the objectives of public health surveillance to facilitate the response of public health to emerging health threats (e.g., new diseases). This report provides updated guidelines for evaluating surveillance systems based on CDC's Framework for Program Evaluation in Public Health, research and discussion of concerns related to public health surveillance systems, and comments received from the public health community. The guidelines in this report describe many tasks and related activities that can be applied to public health surveillance systems.
INTRODUCTION

In 1988, CDC published Guidelines for Evaluating Surveillance Systems (1) to promote the best use of public health resources through the development of efficient and effective public health surveillance systems. CDC's Guidelines for Evaluating Surveillance Systems are being updated to address the need for a) the integration of surveillance and health information systems, b) the establishment of data standards, c) the electronic exchange of health data, and d) changes in the objectives of public health surveillance to facilitate the response of public health to emerging health threats (e.g., new diseases). For example, CDC, in collaboration with state and local health departments, is implementing the National Electronic Disease Surveillance System (NEDSS) to better manage and enhance the large number of current surveillance systems and to allow the public health community to respond more quickly to public health threats (e.g., outbreaks of emerging infectious diseases and bioterrorism) (2). When NEDSS is completed, it will electronically integrate and link several types of surveillance systems through standard data formats; a communications infrastructure built on principles of public health informatics; and agreements on data access, sharing, and confidentiality.

In addition, the Health Insurance Portability and Accountability Act of 1996 (HIPAA) mandates that the United States adopt national uniform standards for electronic transactions related to health insurance enrollment and eligibility, health-care encounters, and health insurance claims; for identifiers for health-care providers, payers, and individuals, as well as for the code sets and classification systems used in these transactions; and for the security of these transactions (3). Because the electronic exchange of health data involves personal information, it inherently requires the protection of patient privacy.
Based on CDC's Framework for Program Evaluation in Public Health (4), research and discussion of concerns related to public health surveillance systems, and comments received from the public health community, this report provides updated guidelines for evaluating public health surveillance systems.

BACKGROUND

Public health surveillance is the ongoing, systematic collection, analysis, interpretation, and dissemination of data regarding a health-related event for use in public health action to reduce morbidity and mortality and to improve health (5–7). Data disseminated by a public health surveillance system can be used for immediate public health action, program planning and evaluation, and formulating research hypotheses. For example, data from a public health surveillance system can be used to

Public health surveillance activities are generally authorized by legislators and carried out by public health officials. Public health surveillance systems have been developed to address a range of public health needs. In addition, public health information systems have been defined to include a variety of data sources essential to public health action, and these systems are often used for surveillance (8). Systems range from a simple system that collects data from a single source, to electronic systems that receive data from many sources in multiple formats, to complex surveys. The number and variety of systems will likely increase with advances in electronic data interchange and data integration, which will also heighten the importance of patient privacy, data confidentiality, and system security. Appropriate institutions, agencies, and scientific officials should be consulted about any project involving public health surveillance.

Variety might also increase with the range of health-related events under surveillance. In these guidelines, the term "health-related event" refers to any subject related to a public health surveillance system. For example, a health-related event could include infectious, chronic, or zoonotic diseases; injuries; exposures to toxic substances; health-promoting or health-damaging behaviors; and other surveilled events associated with public health action.

The purpose of evaluating public health surveillance systems is to ensure that problems of public health importance are being monitored efficiently and effectively. Public health surveillance systems should be evaluated periodically, and each evaluation should include recommendations for improving quality, efficiency, and usefulness. The goal of these guidelines is to organize the evaluation of a public health surveillance system. The guidelines outline broad topics into which program-specific qualities can be integrated.
Evaluation of a public health surveillance system focuses on how well the system operates to meet its purpose and objectives. The evaluation should involve an assessment of system attributes, including simplicity, flexibility, data quality, acceptability, sensitivity, predictive value positive, representativeness, timeliness, and stability. With continuing advances in technology and the growing importance of information architecture, certain public health informatics concerns are inherent in these attributes. These concerns include comparable hardware and software, a standard user interface, standard data format and coding, appropriate quality checks, and adherence to confidentiality and security standards (9).

Because public health surveillance systems vary in methods, scope, purpose, and objectives, attributes that are important to one system might be less important to another. A public health surveillance system should emphasize those attributes that are most important for the objectives of the system. Efforts to improve certain attributes (e.g., the ability of a public health surveillance system to detect a health-related event [sensitivity]) might detract from other attributes (e.g., simplicity or timeliness). An evaluation of the public health surveillance system must therefore consider those attributes that are of the highest priority for a given system and its objectives. With these priorities in mind, the guidelines in this report describe many tasks and related activities that can be applied in the evaluation of public health surveillance systems, with the understanding that not every activity under the tasks will be appropriate for every system.

Organization of This Report

This report begins with descriptions of each of the tasks involved in evaluating a public health surveillance system.
These tasks are adapted from the steps in program evaluation in the Framework for Program Evaluation in Public Health (4) as well as from the elements in the original guidelines for evaluating surveillance systems (1). The report concludes with a summary statement regarding evaluating surveillance systems. A checklist that can be detached or photocopied and used when the evaluation is implemented is also included (Appendix A). To assess the quality of the evaluation activities, relevant standards are provided for each of the tasks for evaluating a public health surveillance system (Appendix B). These standards are adapted from the standards for effective evaluation (i.e., utility, feasibility, propriety, and accuracy) in the Framework for Program Evaluation in Public Health (4). Because not every activity under the evaluation tasks will be appropriate for every system, only those standards that are appropriate to a given evaluation should be used.

Task A. Engage the Stakeholders in the Evaluation

Stakeholders can provide input to ensure that the evaluation of a public health surveillance system addresses appropriate questions and assesses pertinent attributes and that its findings will be acceptable and useful. In that context, we define stakeholders as those persons or organizations who use data for the promotion of healthy lifestyles and the prevention and control of disease, injury, or adverse exposure. Stakeholders who might be interested in defining the questions to be addressed by the evaluation and in using its findings include public health practitioners; health-care providers; data providers and users; representatives of affected communities; governments at the local, state, and federal levels; and professional and private nonprofit organizations.

Task B. Describe the Surveillance System to be Evaluated

Activities

Discussion

To construct a balanced and reliable description of the system, multiple sources of information might be needed. The description can be improved by consulting a variety of persons involved with the system and by checking reported descriptions of the system against direct observation.

B.1. Describe the Public Health Importance of the Health-Related Event Under Surveillance

Definition. The public health importance of a health-related event and the need to have that event under surveillance can be described in several ways. Health-related events that affect many persons or that require large expenditures of resources are of public health importance. However, health-related events that affect few persons might also be important, especially if the events cluster in time and place (e.g., a limited outbreak of a severe disease). In other instances, public concerns might focus attention on a particular health-related event, creating or heightening the importance of an evaluation. Diseases that are now rare because of successful control measures might be perceived as unimportant, but their importance should be assessed in light of their potential to serve as sentinel health-related events or to reemerge. Finally, the public health importance of a health-related event is influenced by its level of preventability (10).

Measures. Parameters for measuring the importance of a health-related event (and therefore of the public health surveillance system with which it is monitored) can include (7)

Efforts have been made to provide summary measures of population health status that can be used to make comparative assessments of the health needs of populations (13). Perhaps the best known of these measures are quality-adjusted life years (QALYs), years of healthy life (YHLs), and disability-adjusted life years (DALYs). Based on attributes that represent health status and life expectancy, QALYs, YHLs, and DALYs each provide a one-dimensional measure of overall health. In addition, attempts have been made to quantify the public health importance of various diseases and other health-related events. One study that describes such an approach used a score that takes into account age-specific morbidity and mortality rates as well as health-care costs (14). Another study used a model that ranks public health concerns according to size, urgency, and severity of the problem; economic loss; effect on others; effectiveness, propriety, economics, acceptability, and legality of solutions; and availability of resources (15).

Preventability can be defined at several levels, including primary prevention (preventing the occurrence of disease or other health-related event), secondary prevention (early detection and intervention with the aim of reversing, halting, or at least retarding the progress of a condition), and tertiary prevention (minimizing the effects of disease and disability among persons already ill). For infectious diseases, preventability can also be described as reducing the secondary attack rate or the number of cases transmitted to contacts of the primary case. From the perspective of surveillance, preventability reflects the potential for effective public health intervention at any of these levels.

B.2. Describe the Purpose and Operation of the Surveillance System

Methods. Methods for describing the operation of the public health surveillance system include

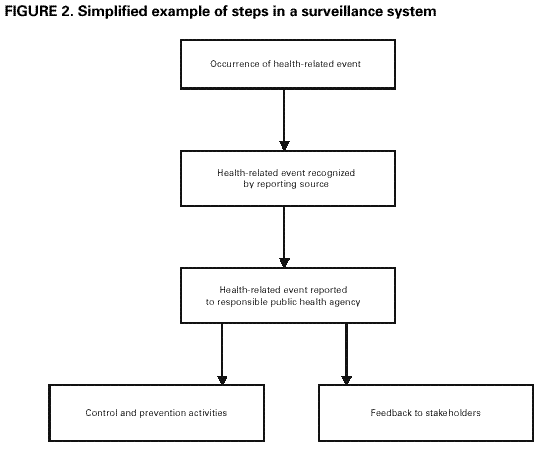

Discussion. The purpose of the system indicates why the system exists, whereas its objectives relate to how the data are used for public health action. The objectives of a public health surveillance system, for example, might address immediate public health action, program planning and evaluation, and formulation of research hypotheses (see Background). The purpose and objectives of the system, including the planned uses of its data, establish a frame of reference for evaluating specific components.

A public health surveillance system depends on a clear case definition for the health-related event under surveillance (7). The case definition of a health-related event can include clinical manifestations (e.g., symptoms), laboratory results, epidemiologic information (e.g., person, place, and time), and/or specified behaviors, as well as levels of certainty (e.g., confirmed/definite, probable/presumptive, or possible/suspected). The use of a standard case definition increases the specificity of reporting and improves the comparability of reports of the health-related event from different sources of data, including different geographic areas. Case definitions might exist for a variety of health-related events under surveillance, including diseases, injuries, adverse exposures, and risk factor or protective behaviors. For example, in the United States, CDC and the Council of State and Territorial Epidemiologists (CSTE) have agreed on standard case definitions for selected infectious diseases (16). In addition, CSTE publishes Position Papers that discuss and define a variety of health-related events (17). When possible, a public health surveillance system should use an established case definition; if it does not, the reason should be explained.

The evaluation should assess how well the public health surveillance system is integrated with other surveillance and health information systems (e.g., data exchange and sharing in multiple formats, and transformation of data).
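A case definition that includes levels of certainty can be written as explicit rules so that every reporting source classifies cases the same way. The Python sketch below is illustrative only: its three inputs (clinical compatibility, laboratory confirmation, and an epidemiologic link) and the rules that combine them are hypothetical and are not taken from any CDC/CSTE case definition. A real system would implement the published definition for the specific health-related event.

```python
from enum import Enum


class CaseStatus(Enum):
    """Levels of certainty for a reported case."""
    CONFIRMED = "confirmed/definite"
    PROBABLE = "probable/presumptive"
    POSSIBLE = "possible/suspected"
    NOT_A_CASE = "not a case"


def classify_case(clinically_compatible: bool,
                  lab_confirmed: bool,
                  epi_linked: bool) -> CaseStatus:
    """Assign a certainty level under a HYPOTHETICAL case definition.

    Assumed rules (illustrative only):
      - laboratory confirmation -> confirmed
      - compatible clinical picture plus an epidemiologic link -> probable
      - compatible clinical picture alone -> possible
    """
    if lab_confirmed:
        return CaseStatus.CONFIRMED
    if clinically_compatible and epi_linked:
        return CaseStatus.PROBABLE
    if clinically_compatible:
        return CaseStatus.POSSIBLE
    return CaseStatus.NOT_A_CASE


if __name__ == "__main__":
    # A clinically compatible illness in a contact of a known case.
    print(classify_case(clinically_compatible=True,
                        lab_confirmed=False,
                        epi_linked=True).value)
```

Keeping the rules in one function, rather than leaving each reporter to interpret the definition, is one way to obtain the comparability across data sources that a standard case definition is meant to provide.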
Streamlining related systems into an integrated public health surveillance network enables individual systems to meet specific data collection needs while avoiding the duplication of effort and lack of standardization that can arise from independent systems (18). An integrated system can address comorbidity concerns (e.g., persons infected with both human immunodeficiency virus and Mycobacterium tuberculosis), identify previously unrecognized risk factors, and provide the means for monitoring additional outcomes of a health-related event. When CDC's NEDSS is completed, it will electronically integrate and link several types of surveillance activities and facilitate more accurate and timely reporting of disease information to CDC and to state and local health departments (2).

CSTE has organized professional discussion among practicing public health epidemiologists at state and federal public health agencies. CSTE has also proposed a national public health surveillance system to serve as a basis for local and state public health agencies to a) prioritize surveillance and health information activities and b) advocate for necessary resources for public health agencies at all levels (19). This national system would be a conceptual framework and virtual surveillance system that incorporates both existing and new surveillance systems for health-related events and their determinants.

Listing the discrete steps that the system takes in processing health-event reports, and then depicting these steps in a flow chart, is often useful. An example of a simplified flow chart for a generic public health surveillance system is included in this report (Figure 1). The mandates and business processes of the lead agency that operates the system, and the participation of other agencies, could be included in this chart. The architecture and data flow of the system can also be depicted in the chart (20,21).
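Once the processing steps are listed in flow-chart order, the average time between consecutive steps can be estimated from dated reports. The Python sketch below assumes a hypothetical sequence of steps (onset, diagnosis, local, state, and national report) and a hypothetical report format (a mapping from step name to date); neither is drawn from a specific system.

```python
from datetime import date
from statistics import mean

# Hypothetical processing steps, in the order they appear in the flow chart.
STEPS = ["onset", "diagnosis", "local_report", "state_report", "national_report"]


def mean_days_between_steps(reports: list[dict[str, date]]) -> dict[str, float]:
    """Return the mean number of days between each pair of consecutive steps.

    A report that lacks either date of a pair is skipped for that pair only,
    so partially processed reports still contribute to earlier intervals.
    """
    intervals: dict[str, float] = {}
    for earlier, later in zip(STEPS, STEPS[1:]):
        gaps = [(r[later] - r[earlier]).days
                for r in reports if earlier in r and later in r]
        if gaps:
            intervals[f"{earlier} -> {later}"] = float(mean(gaps))
    return intervals


if __name__ == "__main__":
    example = [
        {"onset": date(2001, 1, 1), "diagnosis": date(2001, 1, 4),
         "local_report": date(2001, 1, 5), "state_report": date(2001, 1, 9),
         "national_report": date(2001, 1, 16)},
        {"onset": date(2001, 2, 1), "diagnosis": date(2001, 2, 2),
         "local_report": date(2001, 2, 5), "state_report": date(2001, 2, 7)},
    ]
    for step_pair, days in mean_days_between_steps(example).items():
        print(f"{step_pair}: {days:.1f} days")
```

Computing each interval separately, rather than only the total from onset to national report, shows which step in the chart contributes most to delay.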
A chart of architecture and data flow should be detailed enough to explain all of the functions of the system, including average times between steps and data transfers.

The description of the components of the public health surveillance system could include discussion of public health informatics concerns, including comparable hardware and software, a standard user interface, standard data format and coding, appropriate quality checks, and adherence to confidentiality and security standards (9). For example, comparable hardware and software, a standard user interface, and standard data format and coding facilitate efficient data exchange, and a set of common data elements is important for effectively matching data within the system or with other systems.

To document the information needs of public health, CDC, in collaboration with state and local health departments, is developing the Public Health Conceptual Data Model to a) establish data standards for public health, including data definitions, component structures (e.g., for complex data types), code values, and data use; b) collaborate with national health informatics standard-setting bodies to define standards for the exchange of information among public health agencies and health-care providers; and c) construct computerized information systems that conform to established data and data interchange standards for use in the management of data relevant to public health (22). In addition, the description of the system's data management might address who edits the data, how and at what levels the data are edited, and what checks are in place to ensure data quality.

In response to HIPAA mandates, various standard development organizations and terminology and coding groups are working collaboratively to harmonize their separate systems (23).
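Matching records on a set of common data elements can be sketched as a simple deterministic join. The Python example below assumes two hypothetical systems (for instance, separate HIV and tuberculosis registries, as in the comorbidity example earlier) that share three data elements; the field names and normalization rules are assumptions for illustration. In practice, record linkage often uses probabilistic methods rather than the exact matching shown here, and any such linkage must follow the system's confidentiality rules.

```python
from typing import NamedTuple


class MatchKey(NamedTuple):
    """Hypothetical common data elements shared by both systems."""
    last_name: str
    birth_date: str  # ISO 8601 date string, e.g. "1960-05-17"
    sex: str         # single-letter code, e.g. "M", "F", "U"


def to_key(record: dict[str, str]) -> MatchKey:
    """Apply the same formatting rules to every source before comparing."""
    return MatchKey(
        last_name=record["last_name"].strip().upper(),
        birth_date=record["birth_date"].strip(),
        sex=record["sex"].strip().upper()[:1],
    )


def matched_keys(system_a: list[dict[str, str]],
                 system_b: list[dict[str, str]]) -> set[MatchKey]:
    """Return the keys that appear in both systems (exact match only)."""
    return {to_key(r) for r in system_a} & {to_key(r) for r in system_b}


if __name__ == "__main__":
    registry_a = [{"last_name": "Smith ", "birth_date": "1960-05-17", "sex": "m"},
                  {"last_name": "Jones", "birth_date": "1971-03-02", "sex": "F"}]
    registry_b = [{"last_name": "SMITH", "birth_date": "1960-05-17", "sex": "Male"}]
    print(matched_keys(registry_a, registry_b))
```

Without the normalization step, "Smith " and "SMITH" would fail to match, which illustrates why standard data formats and coding matter for linking systems.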
For example, the Accredited Standards Committee X12 (24), which has dealt principally with standards for health insurance transactions, and Health Level Seven (HL7) (25), which has dealt with standards for clinical messaging and exchange of clinical information with health-care organizations (e.g., hospitals), have collaborated on a standardized approach for providing supplementary information to support health-care claims (26). In the area of classification and coding of diseases and other medical terms, the National Library of Medicine has traditionally provided the Unified Medical Language System, a metathesaurus for clinical coding systems that allows terms in one coding system to be mapped to another (27). The passage of HIPAA and the anticipated adoption of standards for electronic medical records have increased efforts directed toward the integration of clinical terminologies (23) (e.g., the merger of the College of American Pathologists' Systematized Nomenclature of Medicine [SNOMED®] [28] and the British Read Codes, the National Health Service thesaurus of health-care terms in Great Britain).

The data analysis description might indicate who analyzes the data, how they are analyzed, and how often. This description could also address how the system ensures that appropriate scientific methods are used to analyze the data.

The public health surveillance system should operate in a manner that allows effective dissemination of health data so that decision makers at all levels can readily understand the implications of the information (7). Options for disseminating data and/or information from the system include electronic data interchange; public-use data files; the Internet; press releases; newsletters; bulletins; annual and other types of reports; publication in scientific, peer-reviewed journals; and poster and oral presentations, including those at individual, community, and professional meetings.
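The idea behind a metathesaurus, mapping a term in one coding system to another through a shared concept, can be shown with a small lookup table. Everything in the Python sketch below is hypothetical: the coding-system names, codes, and concept identifiers are invented placeholders that do not correspond to actual UMLS, SNOMED, or Read Code content, and the code does not use any UMLS interface.

```python
from typing import Optional

# Hypothetical concept table: each concept groups equivalent codes from
# different coding systems (all identifiers are invented placeholders).
CONCEPTS: dict[str, dict[str, str]] = {
    "CONCEPT-001": {"SYSTEM_A": "A-100", "SYSTEM_B": "B-7"},
    "CONCEPT-002": {"SYSTEM_A": "A-200", "SYSTEM_B": "B-9"},
    "CONCEPT-003": {"SYSTEM_A": "A-300"},  # no equivalent in SYSTEM_B
}

# Reverse index built once: (coding system, code) -> concept identifier.
CODE_TO_CONCEPT: dict[tuple[str, str], str] = {
    (system, code): concept
    for concept, codes in CONCEPTS.items()
    for system, code in codes.items()
}


def translate(code: str, source: str, target: str) -> Optional[str]:
    """Map a code from one system to another via the shared concept.

    Returns None when the code is unknown or the target system has no
    equivalent, so unmapped terms are flagged rather than guessed.
    """
    concept = CODE_TO_CONCEPT.get((source, code))
    if concept is None:
        return None
    return CONCEPTS[concept].get(target)


if __name__ == "__main__":
    print(translate("A-100", "SYSTEM_A", "SYSTEM_B"))
    print(translate("A-300", "SYSTEM_A", "SYSTEM_B"))
```

Routing every translation through a concept, instead of maintaining pairwise code-to-code tables, means adding a new coding system requires only one new column of mappings.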
The audiences for health data and information can include public health practitioners, health-care providers, members of affected communities, professional and voluntary organizations, policymakers, the press, and the general public.

In conducting surveillance, public health agencies are authorized to collect personal health data about persons and thus have an obligation to protect against inappropriate use or release of those data. The protection of patient privacy (recognition of a person's right not to share information about himself or herself), data confidentiality (assurance of authorized data sharing), and system security (assurance of authorized system access) is essential to maintaining the credibility of any surveillance system. This protection must ensure that data in a surveillance system regarding a person's health status are shared only with authorized persons. Physical, administrative, operational, and computer safeguards for securing the system and protecting its data must allow authorized access while denying access by unauthorized users.

A related concern in protecting health data is data release, including procedures for releasing record-level data; aggregate tabular data; and data in computer-based, interactive query systems. Even when personal identifiers are removed before data are released, their removal might not be a sufficient safeguard for sharing health data. For example, including demographic information in a line-listed data file for a small number of cases could lead to indirect identification of a person even though no personal identifiers were provided. In the United States, CDC and CSTE have negotiated a policy for the release of data from the National Notifiable Disease Surveillance System (29) to facilitate its use for public health while preserving the confidentiality of the data (30). The policy is being evaluated for revision by CDC and CSTE.
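Removing personal identifiers does not prevent indirect identification when tabulated counts are small. One common disclosure-limitation technique is primary suppression of small cells, sketched below in Python. The threshold of 5 is an assumption chosen for illustration, not a CDC/CSTE rule. The sketch also omits complementary suppression, which is usually needed as well so that a suppressed value cannot be recovered by subtracting the released cells from a row or column total.

```python
from typing import Optional


def suppress_small_cells(counts: dict[str, int],
                         threshold: int = 5) -> dict[str, Optional[int]]:
    """Primary cell suppression for a one-way table of counts.

    Nonzero counts below `threshold` are replaced with None (to be shown as
    suppressed); zeros and counts at or above the threshold are released.
    The default threshold is an illustrative assumption.
    """
    if threshold < 1:
        raise ValueError("threshold must be at least 1")
    return {cell: (None if 0 < n < threshold else n)
            for cell, n in counts.items()}


if __name__ == "__main__":
    cases_by_county = {"County A": 3, "County B": 12, "County C": 0}
    for county, n in suppress_small_cells(cases_by_county).items():
        print(county, "suppressed" if n is None else n)
```

Whether zero counts should also be suppressed depends on the release policy; some policies treat a published zero as disclosive in its own right.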
Standards for the privacy of individually identifiable health data have been proposed in response to HIPAA (3). A model state law has been drafted to address privacy, confidentiality, and security concerns arising from the acquisition, use, disclosure, and storage of health information by public health agencies at the state and local levels (31). In addition, the Federal Committee on Statistical Methodology's series of Statistical Policy Working Papers includes reviews of the statistical methods used by federal agencies and their contractors that release statistical tables or microdata files collected from persons, businesses, or other units under a pledge of confidentiality. These working papers describe basic statistical methods to limit disclosure (e.g., rules for data suppression to protect privacy and to minimize mistaken inferences from small numbers) and provide recommendations for improving disclosure limitation practices (32).

A public health surveillance system might be legally required to participate in a records management program. Records can consist of a variety of materials (e.g., completed forms, electronic files, documents, and reports) that are connected with operating the surveillance system. Proper management of these records prevents a "loss of memory" or "cluttered memory" for the agency that operates the system and enhances the system's ability to meet its objectives.

B.3. Describe the Resources Used to Operate the Surveillance System

Definition. In this report, the methods for assessing resources cover only those resources directly required to operate a public health surveillance system. These resources are sometimes referred to as "direct costs" and include the personnel and financial resources expended in operating the system.

Methods. In describing these resources, consider the following:

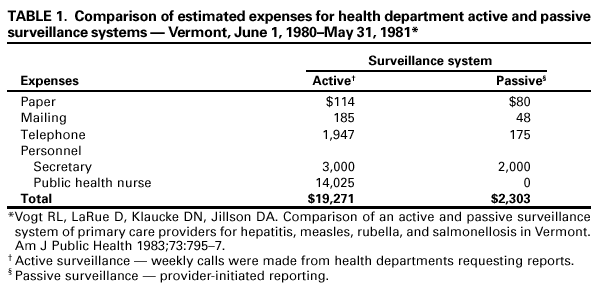

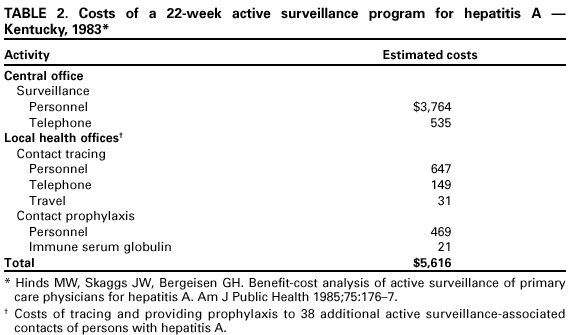

When appropriate, the description of the system's resources should consider all levels of the public health system, from the local health-care provider to municipal, county, state, and federal health agencies. Resource estimation for public health surveillance systems has been carried out in Vermont (Table 1) and Kentucky (Table 2).

Resource Estimation in Vermont. Two methods of collecting public health surveillance data in Vermont were compared (33). The passive system was already in place and consisted of unsolicited reports of notifiable diseases to the district offices or the state health department. The active system was implemented in a probability sample of physician practices: each week, a health department employee called these practitioners to solicit reports of selected notifiable diseases. In comparing the two systems, an attempt was made to estimate their costs, and estimates of direct expenses were computed for both systems (Table 1).

Resource Estimation in Kentucky. Another example of resource estimation was provided by an assessment of the costs of a public health surveillance system involving the active solicitation of case reports of type A hepatitis in Kentucky (Table 2) (34). The resources invested in the direct operation of the system in 1983 were for personnel and telephone expenses and were estimated at $3,764 and $535, respectively. Nine more cases were found through this system than would have been found through the passive surveillance system, and an estimated seven hepatitis cases were prevented by administering prophylaxis to the contacts of the nine case-patients.

Discussion. This approach to assessing resources includes only those personnel and material resources required for the operation of surveillance and excludes a broader definition of costs that might be considered in a more comprehensive evaluation.
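The Kentucky figures can be turned into simple cost-per-outcome ratios: $3,764 for personnel plus $535 for telephone gives $4,299 in direct costs for 1983, set against the nine additional cases found and the estimated seven cases prevented. The Python sketch below performs only this arithmetic; the resulting ratios are derived here for illustration and are not figures reported in the original assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DirectCosts:
    """Direct operating costs of a surveillance system for one period."""
    personnel: float
    telephone: float

    @property
    def total(self) -> float:
        return self.personnel + self.telephone


def cost_per_outcome(costs: DirectCosts, outcomes: int) -> float:
    """Divide total direct costs by a count of outcomes (e.g., cases found)."""
    if outcomes <= 0:
        raise ValueError("outcomes must be a positive count")
    return costs.total / outcomes


if __name__ == "__main__":
    kentucky_1983 = DirectCosts(personnel=3764, telephone=535)
    print(f"Total direct costs: ${kentucky_1983.total:,.0f}")
    print(f"Per additional case found: ${cost_per_outcome(kentucky_1983, 9):,.2f}")
    print(f"Per case prevented: ${cost_per_outcome(kentucky_1983, 7):,.2f}")
```

The results are roughly $478 per additional case found and $614 per case prevented. Because only direct costs enter the calculation, these ratios understate the full cost per outcome.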
For example, the assessment of resources could include the estimation of indirect costs (e.g., follow-up laboratory tests) and costs of secondary data sources (e.g., vital statistics or survey data). The assessment of the system's operational resources should not be done in isolation from the program or initiative that relies on the public health surveillance system. A more formal economic evaluation of the system (i.e., judging costs relative to benefits) could be included with the resource description. Estimating the effect of the system on decision making, treatment, care, prevention, education, and/or research might be possible (35,36). For some surveillance systems, however, a more realistic approach would be to judge costs based on the objectives and usefulness of the system.

Task C. Focus the Evaluation Design

Definition

The direction and process of the evaluation must be focused to ensure that time and resources are used as efficiently as possible.

Methods

Focusing the evaluation design for a public health surveillance system involves

Discussion

Depending on the specific purpose of the evaluation, its design could be straightforward or complex. An effective evaluation design is contingent upon a) its specific purpose being understood by all of the stakeholders in the evaluation and b) the persons who need to know its findings and recommendations being committed to using the information it generates. In addition, when multiple stakeholders are involved, agreements that clarify roles and responsibilities might need to be established among those who are implementing the evaluation.

Standards for assessing how the public health surveillance system performs establish what the system must accomplish to be considered successful in meeting its objectives. These standards specify, for example, what levels of usefulness and simplicity are relevant for the system, given its objectives. Approaches to setting useful standards for assessing the system's performance include a review of current scientific literature on the health-related event under surveillance and/or consultation with appropriate specialists, including users of the data.

Task D. Gather Credible Evidence Regarding the Performance of the Surveillance System

Activities

Discussion

Public health informatics concerns for public health surveillance systems (see Task B.2, Discussion) can be addressed in the evidence gathered regarding the performance of the system. Evidence of the system's performance must be viewed as credible; for example, the gathered evidence must be reliable, valid, and informative for its intended use. Many potential sources of evidence regarding the system's performance exist, including consultations with physicians, epidemiologists, statisticians, behavioral scientists, public health practitioners, laboratory directors, program managers, data providers, and data users.

D.1. Indicate the Level of Usefulness

Definition. A public health surveillance system is useful if it contributes to the prevention and control of adverse health-related events, including an improved understanding of the public health implications of such events. A public health surveillance system can also be useful if it helps to determine that an adverse health-related event previously thought to be unimportant is actually important. In addition, data from a surveillance system can be useful in contributing to performance measures (37), including health indicators (38) that are used in needs assessments and accountability systems.

Methods. An assessment of the usefulness of a public health surveillance system should begin with a review of the objectives of the system and should consider the system's effect on policy decisions and disease-control programs. Depending on the objectives of a particular surveillance system, the system might be considered useful if it satisfactorily addresses at least one of the following questions. Does the system

A survey of persons who use data from the system might be helpful in gathering evidence regarding the usefulness of the system. The survey could be done either formally with standard methodology or informally.

Discussion. Usefulness might be affected by all the attributes of a public health surveillance system (see Task D.2, Describe Each System Attribute). For example, increased sensitivity might afford a greater opportunity for identifying outbreaks and understanding the natural course of an adverse health-related event in the population under surveillance. Improved timeliness allows control and prevention activities to be initiated earlier. Increased predictive value positive enables public health officials to focus resources for control and prevention measures more accurately. A representative surveillance system will better describe the epidemiologic characteristics of a health-related event in a defined population. Public health surveillance systems that are simple, flexible, acceptable, and stable will likely be more complete and useful for public health action.

D.2. Describe Each System Attribute

D.2.a. Simplicity

Definition. The simplicity of a public health surveillance system refers to both its structure and ease of operation. Surveillance systems should be as simple as possible while still meeting their objectives.

Methods. A chart describing the flow of data and the lines of response in a surveillance system can help assess the simplicity or complexity of a surveillance system. A simplified flow chart for a generic surveillance system is included in this report (Figure 1). The following measures (see Task B.2) might be considered in evaluating the simplicity of a system:

Discussion. Thinking of the simplicity of a public health surveillance system from the design perspective might be useful. An example of a system that is simple in design is one with a case definition that is easy to apply (i.e., the case is easily ascertained) and in which the person identifying the case will also be the one analyzing and using the information. A more complex system might involve some of the following:

Simplicity is closely related to acceptability and timeliness. Simplicity also affects the amount of resources required to operate the system.

D.2.b. Flexibility

Definition. A flexible public health surveillance system can adapt to changing information needs or operating conditions with little additional time, personnel, or allocated funds. Flexible systems can accommodate, for example, new health-related events, changes in case definitions or technology, and variations in funding or reporting sources. In addition, systems that use standard data formats (e.g., in electronic data interchange) can be easily integrated with other systems and thus might be considered flexible.

Methods. Flexibility is probably best evaluated retrospectively by observing how a system has responded to a new demand. An important characteristic of CDC's Behavioral Risk Factor Surveillance System (BRFSS) is its flexibility (39). Conducted in collaboration with state health departments, BRFSS is an ongoing sample survey that gathers and reports state-level prevalence data on health behaviors related to the leading preventable causes of death as well as data on preventive health practices. The system permits states to add questions of their own design to the BRFSS questionnaire but is uniform enough to allow state-to-state comparisons for certain questions. These state-specific questions can address emergent and locally important health concerns. In addition, states can stratify their BRFSS samples to estimate prevalence data for regions or counties within their respective states.

Discussion. Unless efforts have been made to adapt the public health surveillance system to another disease (or other health-related event), a revised case definition, additional data sources, new information technology, or changes in funding, assessing the flexibility of that system might be difficult. In the absence of practical experience, the design and workings of a system can be examined.
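The BRFSS paragraph notes that states can stratify their samples to estimate prevalence for regions or counties. As a minimal sketch (a generic stratified estimator, not the BRFSS weighting procedure, which this report does not describe), each stratum's own proportion serves as the regional estimate, and a state-level estimate weights those proportions by each stratum's share of the population. The `Stratum` class, its fields, and all counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Stratum:
    """One sampling stratum, e.g., a region or county within a state (hypothetical)."""
    name: str
    population: int   # persons the stratum represents (e.g., a census count)
    respondents: int  # persons sampled and interviewed in the stratum
    positives: int    # respondents reporting the behavior of interest

    def prevalence(self) -> float:
        """Regional estimate: the simple proportion within this stratum."""
        return self.positives / self.respondents


def stratified_prevalence(strata: list[Stratum]) -> float:
    """State-level estimate: stratum prevalences weighted by population share."""
    total_population = sum(s.population for s in strata)
    return sum(s.population / total_population * s.prevalence() for s in strata)


if __name__ == "__main__":
    strata = [
        Stratum("North", population=600_000, respondents=400, positives=80),
        Stratum("South", population=400_000, respondents=400, positives=120),
    ]
    for s in strata:
        print(s.name, round(s.prevalence(), 3))
    print("state", round(stratified_prevalence(strata), 3))
```

With equal samples of 400 in each hypothetical region, the population-weighted state estimate is 0.24, whereas pooling all 800 respondents would give 0.25; the weighting corrects for the smaller region having been sampled more heavily relative to its size.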
Simpler systems might be more flexible (i.e., fewer components will need to be modified when adapting the system for a change in information needs or operating conditions).

D.2.c. Data Quality

Definition. Data quality reflects the completeness and validity of the data recorded in the public health surveillance system.

Methods. Examining the percentage of "unknown" or "blank" responses to items on surveillance forms is a straightforward measure of data quality. Data of high quality will have low percentages of such responses. However, a full assessment of the completeness and validity of the system's data might require a special study. Data values recorded in the surveillance system can be compared to "true" values through, for example, a review of sampled data (40), a special record linkage (41), or patient interviews (42). In addition, the calculation of sensitivity (Task D.2.e) and predictive value positive (Task D.2.f) for the system's data fields might be useful in assessing data quality. Quality of data is influenced by the performance of the screening and diagnostic tests (i.e., the case definition) for the health-related event, the clarity of hardcopy or electronic surveillance forms, the quality of training and supervision of persons who complete these surveillance forms, and the care exercised in data management. A review of these facets of a public health surveillance system provides an indirect measure of data quality.

Discussion. Most surveillance systems rely on more than simple case counts. Data commonly collected include the demographic characteristics of affected persons, details about the health-related event, and the presence or absence of potential risk factors. The quality of these data depends on their completeness and validity. The acceptability (see Task D.2.d) and representativeness (Task D.2.g) of a public health surveillance system are related to data quality.
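The simplest data-quality measure described above, the percentage of "unknown" or "blank" responses for each form item, can be sketched as follows. The record layout (one dictionary per report), the field names, and the set of tokens treated as missing are assumptions for illustration; a real system would use its own form codes.

```python
# Tokens treated as "unknown" or "blank"; this set is an assumption and
# should be adapted to the coding conventions of the actual surveillance form.
MISSING_TOKENS = {"", "unknown", "unk"}


def is_missing(value: object) -> bool:
    """True if a form item was left blank or coded as unknown."""
    if value is None:
        return True
    return str(value).strip().lower() in MISSING_TOKENS


def percent_missing(records: list[dict[str, object]], fields: list[str]) -> dict[str, float]:
    """Percentage of reports with an unknown or blank value, per form item."""
    if not records:
        raise ValueError("no reports to assess")
    result = {}
    for field in fields:
        n_missing = sum(1 for record in records if is_missing(record.get(field)))
        result[field] = 100.0 * n_missing / len(records)
    return result


if __name__ == "__main__":
    # Hypothetical case reports.
    reports = [
        {"age": 34, "sex": "F", "county": "Unknown"},
        {"age": None, "sex": "M", "county": "Adams"},
        {"age": 51, "sex": "", "county": "unk"},
        {"age": 27, "sex": "F", "county": "Brown"},
    ]
    print(percent_missing(reports, ["age", "sex", "county"]))
    # {'age': 25.0, 'sex': 25.0, 'county': 50.0}
```

A high percentage flags an item for follow-up, but, as the text notes, a low percentage says nothing about validity; that requires comparison with "true" values.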
A system with high-quality data is more likely to be accepted by those who participate in it and can more accurately represent the health-related event under surveillance.

D.2.d. Acceptability

Definition. Acceptability reflects the willingness of persons and organizations to participate in the surveillance system.

Methods. Acceptability refers to the willingness of persons in the sponsoring agency that operates the system and persons outside the sponsoring agency (e.g., persons who are asked to report data) to use the system. To assess acceptability, the points of interaction between the system and its participants must be considered (Figure 1), including persons with the health-related event and those reporting cases. Quantitative measures of acceptability can include

Some of these measures might be obtained from a review of surveillance report forms, whereas others would require special studies or surveys.

Discussion. Acceptability is a largely subjective attribute that encompasses the willingness of persons on whom the public health surveillance system depends to provide accurate, consistent, complete, and timely data. Some factors influencing the acceptability of a particular system are

D.2.e. Sensitivity

Definition. The sensitivity of a surveillance system can be considered on two levels. First, at the level of case reporting, sensitivity refers to the proportion of cases of a disease (or other health-related event) detected by the surveillance system (43). Second, sensitivity can refer to the ability to detect outbreaks, including the ability to monitor changes in the number of cases over time.

Methods. The measurement of the sensitivity of a public health surveillance system is affected by the likelihood that

These situations can be extended by analogy to public health surveillance systems that do not fit the traditional disease/care-provider model. For example, the sensitivity of a telephone-based surveillance system of morbidity or risk factors is affected by

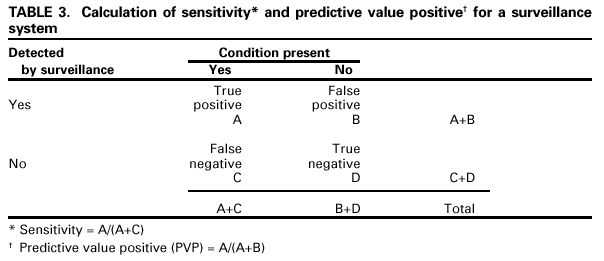

The extent to which these situations are explored depends on the system and on the resources available for assessing sensitivity. Assuming that most reported cases are correctly classified, the primary emphasis in assessing sensitivity is to estimate the proportion of the total number of cases in the population under surveillance that the system detects, represented by A/(A+C) in this report (Table 3). Surveillance of vaccine-preventable diseases provides an example in which the detection of outbreaks is a critical concern (44). Approaches that have been recommended for improving the sensitivity of reporting of vaccine-preventable diseases might be applicable to other health-related events (44). For example, the sensitivity of a system might be improved by

The capacity for a public health surveillance system to detect outbreaks (or other changes in incidence and prevalence) might be enhanced substantially if detailed diagnostic tests are included in the system. For example, the use of molecular subtyping in the surveillance of Escherichia coli O157:H7 infections in Minnesota enabled the surveillance system to detect outbreaks that would otherwise have gone unrecognized (45). The measurement of the sensitivity of the surveillance system (Table 3) requires a) collection of or access to data usually external to the system to determine the true frequency of the condition in the population under surveillance (46) and b) validation of the data collected by the system. Examples of data sources used to assess the sensitivity of health information or public health surveillance systems include medical records (47,48) and registries (49,50). In addition, sensitivity can be assessed through estimates of the total number of cases in the population under surveillance by using capture-recapture techniques (51,52). To adequately assess the sensitivity of the public health surveillance system, calculating more than one measurement of the attribute might be necessary. For example, sensitivity could be determined for the system's data fields, for each data source or for combinations of data sources (48), for specific conditions under surveillance (53), or for each of several years (54). The use of a Venn diagram might help depict measurements of sensitivity for combinations of the system's data sources (55).

Discussion. A literature review can be helpful in determining sensitivity measurements for a public health surveillance system (56). Assessing the sensitivity of each data source, including combinations of data sources, can determine whether eliminating a current data source or adding a new one would affect the overall surveillance results (48).
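Using the cells of Table 3 (A = true cases reported, C = true cases not reported), sensitivity is A/(A+C). The sketch below assumes that the set of true cases has already been established from an external source (e.g., medical record review) and that case identifiers are matched across sources; it computes sensitivity for each data source and for every combination of sources, the quantities a Venn diagram would display. It also includes one common two-source capture-recapture estimator (Chapman's correction of the Lincoln-Petersen estimator), chosen here for illustration only; the report does not specify a particular capture-recapture method. Source names and case identifiers are hypothetical.

```python
from itertools import combinations


def sensitivity(detected: set[str], true_cases: set[str]) -> float:
    """A/(A+C): share of true cases that were detected."""
    a = len(detected & true_cases)  # cell A: true cases detected
    c = len(true_cases - detected)  # cell C: true cases missed
    return a / (a + c)


def sensitivity_by_source(
    sources: dict[str, set[str]], true_cases: set[str]
) -> dict[tuple[str, ...], float]:
    """Sensitivity of each data source and of every combination (union) of sources."""
    names = sorted(sources)
    result = {}
    for k in range(1, len(names) + 1):
        for combo in combinations(names, k):
            detected = set().union(*(sources[name] for name in combo))
            result[combo] = sensitivity(detected, true_cases)
    return result


def chapman_estimate(source_1: set[str], source_2: set[str]) -> float:
    """Two-source capture-recapture estimate of the total number of cases.

    Assumes both lists contain confirmed cases, records are matched correctly,
    and the two sources detect cases independently of each other.
    """
    n1, n2 = len(source_1), len(source_2)
    m = len(source_1 & source_2)  # cases found by both sources
    return (n1 + 1) * (n2 + 1) / (m + 1) - 1


if __name__ == "__main__":
    true_cases = {f"c{i}" for i in range(1, 11)}  # hypothetical validated cases
    sources = {
        "laboratory": {"c1", "c2", "c3", "c4", "c5", "c6"},
        "physician": {"c4", "c5", "c6", "c7", "c8"},
    }
    for combo, value in sensitivity_by_source(sources, true_cases).items():
        print(" + ".join(combo), round(value, 2))
    print("estimated total", chapman_estimate(sources["laboratory"], sources["physician"]))
```

With these hypothetical sources, sensitivity is 0.6 for the laboratory source, 0.5 for physician reports, and 0.8 for the two combined, and the capture-recapture estimate of total cases is 9.5 (the hypothetical true total is 10). The independence assumption is often violated in practice, for example when both sources tend to capture the most severe cases, which biases the estimate.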
A public health surveillance system that does not have high sensitivity can still be useful in monitoring trends as long as the sensitivity remains reasonably constant over time. Questions concerning sensitivity in surveillance systems most commonly arise when changes in the occurrence of a health-related event are noted. Changes in sensitivity can be precipitated by certain circumstances (e.g., heightened awareness of a health-related event, introduction of new diagnostic tests, and changes in the method of conducting surveillance). A search for such "artifacts" is often an initial step in outbreak investigations.

D.2.f. Predictive Value Positive

Definition. Predictive value positive (PVP) is the proportion of reported cases that actually have the health-related event under surveillance (43).

Methods. The assessment of sensitivity and of PVP provides different perspectives regarding how well the system is operating. Depending on the objectives of the public health surveillance system, assessing PVP whenever sensitivity has been assessed might be necessary (47–50,53). In this report, PVP is represented by A/(A+B) (Table 3). In assessing PVP, primary emphasis is placed on the confirmation of cases reported through the surveillance system. The effect of PVP on the use of public health resources can be considered on two levels. At the level of case detection, PVP affects the amount of resources used for case investigations. For example, in some states, every reported case of type A hepatitis is promptly investigated by a public health nurse, and contacts at risk are referred for prophylactic treatment. A surveillance system with low PVP, and therefore frequent "false-positive" case reports, would lead to misdirected resources. At the level of outbreak (or epidemic) detection, a high rate of erroneous case reports might trigger an inappropriate outbreak investigation.
Therefore, the proportion of epidemics identified by the surveillance system that are true epidemics can be used to assess this attribute. Calculating the PVP might require that records be kept of investigations prompted by information obtained from the public health surveillance system. At the level of case detection, a record of the number of case investigations completed and the proportion of reported persons who actually had the health-related event under surveillance would allow the calculation of the PVP. At the level of outbreak detection, the review of personnel activity reports, travel records, and telephone logbooks might enable the assessment of PVP. For some surveillance systems, however, a review of data external to the system (e.g., medical records) might be necessary to confirm cases to calculate PVP. Examples of data sources used to assess the PVP of health information or public health surveillance systems include medical records (48,57), registries (49,58), and death certificates (59). To assess the PVP of the system adequately, calculating more than one measurement of the attribute might be necessary. For example, PVP could be determined for the system's data fields, for each data source or combinations of data sources (48), or for specific health-related events (49).

Discussion. PVP is important because a low value means that noncases might be investigated, and outbreaks might be identified that are not true but are instead artifacts of the public health surveillance system (e.g., a "pseudo-outbreak"). False-positive reports can lead to unnecessary interventions, and falsely detected outbreaks can lead to costly investigations and undue concern in the population under surveillance. A public health surveillance system with a high PVP will lead to fewer misdirected resources.
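PVP can be computed directly from investigation records as A/(A+B) (Table 3), either at the case level (confirmed cases among reported cases investigated) or at the outbreak level (true outbreaks among signaled outbreaks). Because PVP also depends on the sensitivity and specificity of the case definition and on prevalence, this minimal sketch adds the standard Bayes' theorem expression linking them. Function names and counts are hypothetical.

```python
def pvp(true_positive: int, false_positive: int) -> float:
    """A/(A+B): share of reports (or signaled outbreaks) that were confirmed."""
    return true_positive / (true_positive + false_positive)


def pvp_from_case_definition(sensitivity: float, specificity: float, prevalence: float) -> float:
    """PVP implied by Bayes' theorem for a case definition applied at a given prevalence."""
    true_pos = sensitivity * prevalence                  # expected share of true positives
    false_pos = (1 - specificity) * (1 - prevalence)     # expected share of false positives
    return true_pos / (true_pos + false_pos)


if __name__ == "__main__":
    # Case level: hypothetical log of 100 completed investigations, 80 confirmed.
    print(pvp(true_positive=80, false_positive=20))  # 0.8
    # Outbreak level: hypothetical log of 4 signaled outbreaks, 3 confirmed.
    print(pvp(true_positive=3, false_positive=1))    # 0.75
    # How PVP responds to specificity and prevalence (sensitivity fixed at 0.90).
    for spec in (0.95, 0.99):
        for prev in (0.01, 0.10):
            print(spec, prev, round(pvp_from_case_definition(0.90, spec, prev), 3))
```

For a case definition with 90% sensitivity applied at 1% prevalence, raising specificity from 95% to 99% raises the PVP from about 0.15 to about 0.48, which illustrates why a more specific case definition produces fewer false-positive reports.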
The PVP reflects the sensitivity and specificity of the case definition (i.e., the screening and diagnostic tests for the health-related event) and the prevalence of the health-related event in the population under surveillance. The PVP can improve with increasing specificity of the case definition. In addition, good communication between the persons who report cases and the receiving agency can lead to an improved PVP.

D.2.g. Representativeness

Definition. A public health surveillance system that is representative accurately describes the occurrence of a health-related event over time and its distribution in the population by place and person.

Methods. Representativeness is assessed by comparing the characteristics of reported events to all such actual events. Although the latter information is generally not known, some judgment of the representativeness of surveillance data is possible, based on knowledge of

Representativeness can be examined through special studies that seek to identify a sample of all cases. For example, the representativeness of a regional injury surveillance system was examined using a systematic sample of injured persons (62). The study examined statistical measures of population variables (e.g., age, sex, residence, nature of injury, and hospital admission) and concluded that the differences in the distribution of injuries in the system's database and their distribution in the sampled data should not affect the ability of the surveillance system to achieve its objectives. For many health-related events under surveillance, the proper analysis and interpretation of the data require the calculation of rates. The denominators for these rate calculations are often obtained from a completely separate data system maintained by another agency (e.g., the United States Bureau of the Census in collaboration with state governments [63]). The choice of an appropriate denominator for the rate calculation should be given careful consideration to ensure an accurate representation of the health-related event over time and by place and person. For example, numerators and denominators must be comparable across categories (e.g., race [64], age, residence, and/or time period), and the source for the denominator should be consistent over time when measuring trends in rates. In addition, consideration should be given to the selection of the standard population for the adjustment of rates (65).

Discussion. To generalize findings from surveillance data to the population at large, the data from a public health surveillance system should accurately reflect the characteristics of the health-related event under surveillance. These characteristics generally relate to time, place, and person.
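The rate calculations described in the Methods above can be sketched as a crude rate plus a directly age-adjusted rate, in which age-specific rates are weighted by a chosen standard population. The age groups, counts, and standard population weights below are hypothetical (they are not an official standard population). The function refuses to run when numerator, denominator, and standard use different categories, reflecting the requirement that numerators and denominators be comparable.

```python
def crude_rate(cases: int, population: int, per: int = 100_000) -> float:
    """Cases per `per` persons in the population under surveillance."""
    return cases / population * per


def age_adjusted_rate(
    cases_by_age: dict[str, int],
    population_by_age: dict[str, int],
    standard_population: dict[str, int],
    per: int = 100_000,
) -> float:
    """Direct standardization: age-specific rates weighted by a standard population."""
    # Numerators, denominators, and standard must use identical categories.
    if not (cases_by_age.keys() == population_by_age.keys() == standard_population.keys()):
        raise ValueError("age categories differ between numerator, denominator, and standard")
    standard_total = sum(standard_population.values())
    adjusted = 0.0
    for age_group, standard_count in standard_population.items():
        age_specific_rate = cases_by_age[age_group] / population_by_age[age_group]
        adjusted += age_specific_rate * standard_count / standard_total
    return adjusted * per


if __name__ == "__main__":
    cases = {"0-39": 10, "40-64": 30, "65+": 60}                # hypothetical
    population = {"0-39": 50_000, "40-64": 30_000, "65+": 20_000}
    standard = {"0-39": 600, "40-64": 300, "65+": 100}          # hypothetical weights
    print(round(crude_rate(sum(cases.values()), sum(population.values())), 1))
    print(round(age_adjusted_rate(cases, population, standard), 1))
```

Here the crude rate is 100 per 100,000 and the adjusted rate is 72 per 100,000, because the hypothetical standard gives less weight to persons aged ≥65 years, the group with the highest age-specific rate. A different standard would yield a different adjusted rate, which is why the choice of standard population deserves careful consideration.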
An important result of evaluating the representativeness of a surveillance system is the identification of population subgroups that might be systematically excluded from the reporting system through inadequate methods of monitoring them. This evaluation process enables appropriate modification of data collection procedures and more accurate projection of incidence of the health-related event in the target population (66). For certain health-related events, the accurate description of the event over time involves targeting appropriate points in a broad spectrum of exposure and the resultant disease or condition. In the surveillance of cardiovascular diseases, for example, it might be useful to distinguish between preexposure conditions (e.g., tobacco use policies and social norms), the exposure (e.g., tobacco use, diet, exercise, stress, and genetics), a pre-symptomatic phase (e.g., cholesterol and homocysteine levels), early-stage disease (e.g., abnormal stress test), late-stage disease (e.g., angina and acute myocardial infarction), and death from the disease. The measurement of risk factor behaviors (e.g., tobacco use) might enable the monitoring of important aspects in the development of a disease or other health-related event. Because surveillance data are used to identify groups at high risk and to target and evaluate interventions, being aware of the strengths and limitations of the system's data is important. Errors and bias can be introduced into the system at any stage (67). For example, case ascertainment (or selection) bias can result from changes in reporting practices over time or from differences in reporting practices by geographic location or by health-care providers. Differential reporting among population subgroups can result in misleading conclusions about the health-related event under surveillance.

D.2.h. Timeliness

Definition. Timeliness reflects the speed between steps in a public health surveillance system.

Methods.
A simplified example of the steps in a public health surveillance system is included in this report (Figure 2). The time interval linking any two of these steps can be examined. The interval usually considered first is the amount of time between the onset of a health-related event and the reporting of that event to the public health agency responsible for instituting control and prevention measures. Factors affecting the time involved during this interval can include the patient's recognition of symptoms, the patient's acquisition of medical care, the attending physician's diagnosis or submission of a laboratory test, the laboratory reporting test results back to the physician and/or to a public health agency, and the physician reporting the event to a public health agency. Another aspect of timeliness is the time required for the identification of trends, outbreaks, or the effect of control and prevention measures. Factors that influence the identification process can include the severity and communicability of the health-related event, staffing of the responsible public health agency, and communication among involved health agencies and organizations. The most relevant time interval might vary with the type of health-related event under surveillance. With acute or infectious diseases, for example, the interval from the onset of symptoms or the date of exposure might be used. With chronic diseases, it might be more useful to look at elapsed time from diagnosis rather than from the date of symptom onset.

Discussion. The timeliness of a public health surveillance system should be evaluated in terms of availability of information for control of a health-related event, including immediate control efforts, prevention of continued exposure, or program planning. The need for rapidity of response in a surveillance system depends on the nature of the health-related event under surveillance and the objectives of that system.
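The interval from onset to report (or, for chronic diseases, from diagnosis to report) can be summarized from dated case records. In this minimal sketch, the field names (`onset_date`, `report_date`) and the 7-day target are assumptions for illustration, not values from the report. Records missing either date are excluded and counted, so that incomplete dating does not silently distort the result.

```python
from datetime import date
from statistics import median
from typing import Optional


def timeliness_summary(
    records: list[dict[str, Optional[date]]],
    start_field: str = "onset_date",   # hypothetical field name
    end_field: str = "report_date",    # hypothetical field name
    target_days: int = 7,              # illustrative target, not from the report
) -> dict[str, float]:
    """Summarize the interval, in days, between two steps of the surveillance process."""
    intervals = [
        (r[end_field] - r[start_field]).days
        for r in records
        if r.get(start_field) is not None and r.get(end_field) is not None
    ]
    if not intervals:
        raise ValueError("no records contain both dates")
    return {
        "n": len(intervals),
        "n_excluded": len(records) - len(intervals),  # records missing a date
        "median_days": median(intervals),
        "max_days": max(intervals),
        "share_within_target": sum(d <= target_days for d in intervals) / len(intervals),
    }


if __name__ == "__main__":
    reports = [  # hypothetical case reports
        {"onset_date": date(2001, 3, 1), "report_date": date(2001, 3, 12)},
        {"onset_date": date(2001, 3, 3), "report_date": date(2001, 3, 9)},
        {"onset_date": date(2001, 3, 5), "report_date": date(2001, 3, 20)},
        {"onset_date": None, "report_date": date(2001, 3, 21)},
    ]
    print(timeliness_summary(reports))
```

For these hypothetical reports the median interval is 11 days and only one of three dated reports arrives within 7 days. For chronic diseases, passing `start_field="diagnosis_date"` (another hypothetical field) would measure elapsed time from diagnosis instead.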
A study of a public health surveillance system for Shigella infections, for example, indicated that the typical case of shigellosis was brought to the attention of health officials 11 days after onset of symptoms, a period sufficient for secondary and tertiary transmission to occur. This example indicates that the level of timeliness was not satisfactory for effective disease control (68). However, when a long period of latency occurs between exposure and appearance of disease, the rapid identification of cases of illness might not be as important as the rapid availability of exposure data to provide a basis for interrupting and preventing exposures that lead to disease. For example, children with elevated blood lead levels and no clinically apparent illness are at risk for adverse health-related events. CDC recommends that follow-up of asymptomatic children with elevated blood lead levels include educational activities regarding lead poisoning prevention and investigation and remediation of sources of lead exposure (69). In addition, surveillance data are being used by public health agencies to track progress toward national and state health objectives (38,70). The increasing use of electronic data collection from reporting sources (e.g., an electronic laboratory-based surveillance system) and via the Internet (a web-based system), as well as the increasing use of electronic data interchange by surveillance systems, might promote timeliness (6,29,71,72).

D.2.i. Stability

Definition. Stability refers to the reliability (i.e., the ability to collect, manage, and provide data properly without failure) and availability (the ability to be operational when it is needed) of the public health surveillance system.

Methods. Measures of the system's stability can include

Discussion. A lack of dedicated resources might affect the stability of a public health surveillance system. For example, workforce shortages can threaten reliability and availability. Yet, regardless of the health-related event being monitored, stable performance is crucial to the viability of the surveillance system. Unreliable and unavailable surveillance systems can delay or prevent necessary public health action. A more formal assessment of the system's stability could be made through modeling procedures (73). However, a more useful approach might involve assessing stability based on the purpose and objectives of the system.

Task E. Justify and State Conclusions, and Make Recommendations

Conclusions from the evaluation can be justified through appropriate analysis, synthesis, interpretation, and judgement of the gathered evidence regarding the performance of the public health surveillance system (Task D). Because the stakeholders (Task A) must agree that the conclusions are justified before they will use findings from the evaluation with confidence, the gathered evidence should be linked to their relevant standards for assessing the system's performance (Task C). In addition, the conclusions should state whether the surveillance system is addressing an important public health problem (Task B.1) and is meeting its objectives (Task B.2).

Recommendations should address the modification and/or continuation of the public health surveillance system. Before recommending modifications to a system, the evaluation should consider the interdependence of the system's costs (Task B.3) and attributes (Task D.2). Strengthening one system attribute could adversely affect another attribute of a higher priority. Efforts to improve sensitivity, PVP, representativeness, timeliness, and stability can increase the cost of a surveillance system, although savings in efficiency with computer technology (e.g., electronic reporting) might offset some of these costs.
As sensitivity and PVP approach 100%, a surveillance system is more likely to be representative of the population with the event under surveillance. However, as sensitivity increases, PVP might decrease. Efforts to increase sensitivity and PVP might increase the complexity of a surveillance system, potentially decreasing its acceptability, timeliness, and flexibility. In a study comparing health-department-initiated (active) surveillance and provider-initiated (passive) surveillance, for example, active surveillance did not improve timeliness, despite increased sensitivity (61). In addition, the recommendations can address concerns about ethical obligations in operating the system (74). In some instances, conclusions from the evaluation indicate that the most appropriate recommendation is to discontinue the public health surveillance system; however, this type of recommendation should be considered carefully before it is issued. The cost of renewing a system that has been discontinued could be substantially greater than the cost of maintaining it. The stakeholders in the evaluation should consider relevant public health and other consequences of discontinuing a surveillance system.

Task F. Ensure Use of Evaluation Findings and Share Lessons Learned

Deliberate effort is needed to ensure that the findings from a public health surveillance system evaluation are used and disseminated appropriately. When the evaluation design is focused (Task C), the stakeholders (Task A) can comment on decisions that might affect the likelihood of gathering credible evidence regarding the system's performance. During the implementation of the evaluation (Tasks D and E), considering how potential findings (particularly negative findings) could affect decisions made about the surveillance system might be necessary.
When conclusions from the evaluation and recommendations are made (Task E), follow-up might be necessary to remind intended users of their planned uses and to prevent lessons learned from becoming lost or ignored. Strategies for communicating the findings from the evaluation and recommendations should be tailored to relevant audiences, including persons who provided data used for the evaluation. In the public health community, for example, a formal written report or oral presentation might be important but not necessarily the only means of communicating findings and recommendations from the evaluation to relevant audiences. Several examples of formal written reports of surveillance evaluations have been published in peer-reviewed journals (51,53,57,59,75).

SUMMARY

The guidelines in this report address evaluations of public health surveillance systems. However, these guidelines could also be applied to other systems, including health information systems used for public health action, surveillance systems that are pilot tested, and information systems at individual hospitals or health-care centers. Additional information can also be useful for planning and establishing a public health surveillance system, as well as for monitoring it efficiently and effectively (6,7). To promote the best use of public health resources, all public health surveillance systems should be evaluated periodically. No perfect system exists, however, and tradeoffs must always be made. Each system is unique and must balance benefit versus personnel, resources, and cost allocated to each of its components if the system is to achieve its intended purpose and objectives. The appropriate evaluation of public health surveillance systems becomes paramount as these systems adapt to revised case definitions, new health-related events, new information technology (including standards for data collection and sharing), and current requirements for protecting patient privacy, data confidentiality, and system security.
The goal of this report has been to make the evaluation process inclusive, explicit, and objective. Yet, this report has presented guidelines, not absolutes, for the evaluation of public health surveillance systems. Progress in surveillance theory, technology, and practice continues to occur, and guidelines for evaluating a surveillance system will necessarily evolve.

References

All MMWR HTML versions of articles are electronic conversions from ASCII text into HTML. This conversion may have resulted in character translation or format errors in the HTML version. Users should not rely on this HTML document, but are referred to the electronic PDF version and/or the original MMWR paper copy for the official text, figures, and tables. An original paper copy of this issue can be obtained from the Superintendent of Documents, U.S. Government Printing Office (GPO), Washington, DC 20402-9371; telephone: (202) 512-1800. Contact GPO for current prices.

Questions or messages regarding errors in formatting should be addressed to mmwrq@cdc.gov.

Page converted: 8/22/2001


This page last reviewed 7/19/2001
